French AI startup Mistral AI has released its latest model, the Mixtral 8x22B, a significant advancement for open-source artificial intelligence technology. This new model sets a high standard for performance and efficiency, positioning itself as a leader among open-source AI models.

Unparalleled Efficiency and Multilingual Capabilities

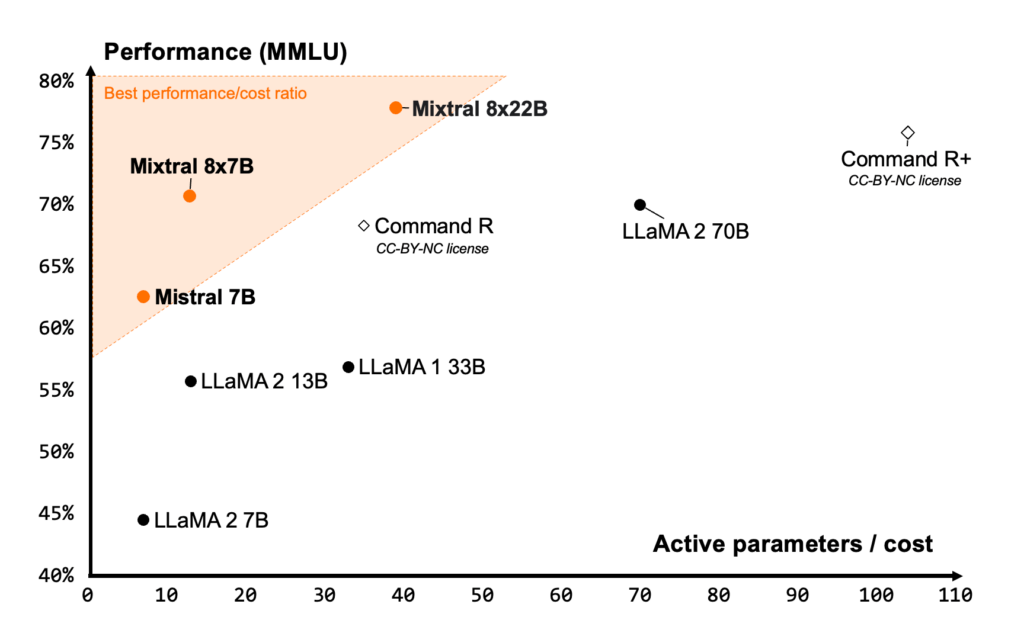

Mixtral 8x22B is built on a Sparse Mixture-of-Experts (SMoE) architecture. Unlike dense models, which activate every parameter for every token, Mixtral 8x22B uses only about 39 billion of its 141 billion parameters for any given token. This speeds up inference and reduces compute costs compared with a dense model of the same total size, while maintaining high performance.
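
To make the sparse activation described above concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration, not Mistral AI's implementation: the choice of two experts per token, the scalar "experts", and the random router scores are all assumptions made for the example. Only the 8-expert count and the 39 billion and 141 billion parameter figures come from the article.

```python
import math
import random

NUM_EXPERTS = 8  # the "8x" in the model name
TOP_K = 2        # assumption: number of experts consulted per token


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_scores, top_k=TOP_K):
    """Pick the top_k highest-scoring experts and renormalise their weights."""
    ranked = sorted(
        range(len(router_scores)),
        key=lambda i: router_scores[i],
        reverse=True,
    )
    chosen = ranked[:top_k]
    weights = softmax([router_scores[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_layer(token, experts, router_scores):
    """Run only the chosen experts and blend their outputs by router weight."""
    output = 0.0
    for index, weight in route(router_scores):
        output += weight * experts[index](token)
    return output


# Toy experts: each one simply scales its input by a different factor.
experts = [lambda x, scale=i + 1: x * scale for i in range(NUM_EXPERTS)]

# Hypothetical router scores; a real router computes these from the token.
random.seed(0)
scores = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]

selected = route(scores)
result = moe_layer(1.0, experts, scores)

# Share of parameters active per token, using the figures quoted above.
active_fraction = 39 / 141

print(f"experts used: {[i for i, _ in selected]} of {NUM_EXPERTS}")
print(f"blended output: {result:.3f}")
print(f"active parameter share: {active_fraction:.1%}")
```

The six experts that are not selected are never evaluated, which is where the compute saving comes from. The active share works out to a little over a quarter rather than exactly two eighths, which is consistent with some parameters being shared by every token instead of belonging to a single expert.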

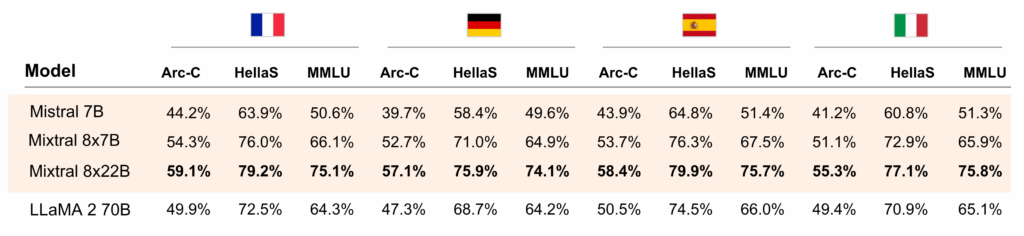

The model is also multilingual: it is fluent in major European languages such as English, French, Italian, German, and Spanish. This makes it particularly valuable for global applications, ranging from automated translation services to multilingual customer support systems.

Advanced Technical Skills and Open Licensing

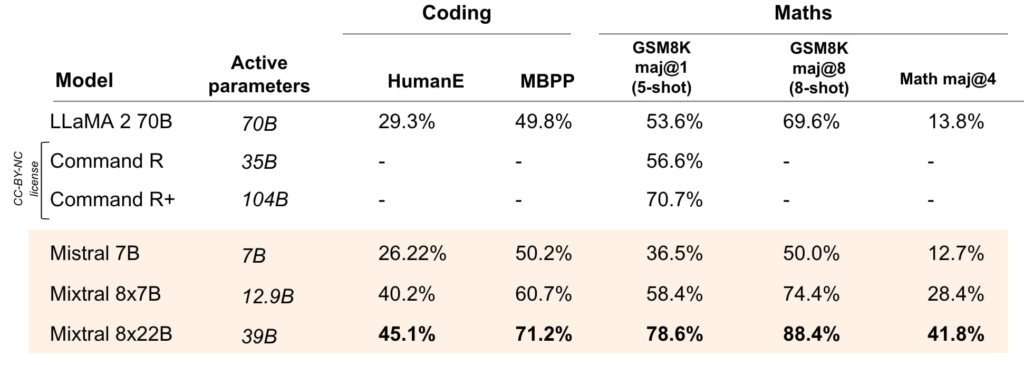

Mixtral 8x22B also performs strongly in technical domains, particularly mathematics and coding. The model supports native function calling and a ‘constrained output mode,’ which makes it well suited to developers building applications on top of it or modernizing existing technology stacks.
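
As a rough illustration of what function calling involves on the application side, the sketch below shows a program describing a tool, receiving a structured call, checking it, and running the matching function. Everything in it is hypothetical: the `convert_currency` tool, the shape of the JSON, and the sample `model_output` string are invented for the example and are not taken from Mistral AI's API or from real model output.

```python
import json


def convert_currency(amount: float, rate: float) -> float:
    """Hypothetical tool that the application exposes to the model."""
    return round(amount * rate, 2)


# Registry of callable tools, keyed by the name the model is told about.
TOOLS = {"convert_currency": convert_currency}

# JSON-Schema-style description of the tool (illustrative format only).
TOOL_SPECS = {
    "convert_currency": {
        "description": "Convert an amount using a given exchange rate.",
        "parameters": {
            "type": "object",
            "properties": {
                "amount": {"type": "number"},
                "rate": {"type": "number"},
            },
            "required": ["amount", "rate"],
        },
    }
}


def dispatch(tool_call_json: str):
    """Parse a structured tool call, validate it, and run the tool."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    arguments = call["arguments"]
    required = TOOL_SPECS[name]["parameters"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return TOOLS[name](**arguments)


# Stand-in for a structured call a model might emit (not real model output).
model_output = (
    '{"name": "convert_currency", '
    '"arguments": {"amount": 100, "rate": 0.92}}'
)

result = dispatch(model_output)
print(result)
```

Constraining the model to emit well-formed structured output matters here because `dispatch` can only act on a call it can parse and validate; free-form text would have to be rejected or repaired first.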

Mistral AI has released Mixtral 8x22B under the Apache 2.0 license to encourage innovation and collaboration within the AI community. This permissive open-source license allows commercial use, modification, and redistribution, subject to its attribution and notice requirements, which should foster broader adoption and development across various sectors.

Setting New Benchmarks in AI Performance

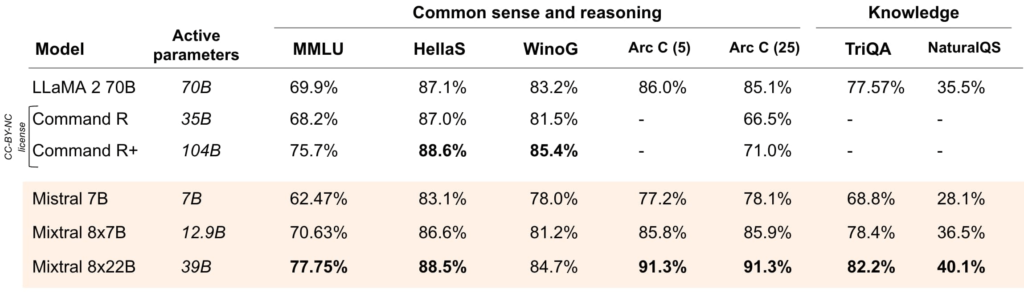

Benchmark results published by Mistral AI indicate that Mixtral 8x22B outperforms many existing models on reasoning and knowledge benchmarks. Its performance on tasks that require understanding context and logical reasoning is particularly noteworthy, making it a valuable resource for enterprises that need deep content analysis and decision-making support.

According to the same results, the model also outperforms competing models on coding and mathematical benchmarks. The recently released instruction-tuned version further improves its performance in these areas, showcasing its potential to handle complex computational tasks.

Implications for the AI Landscape

With its combination of high performance, cost efficiency, and linguistic versatility, Mixtral 8x22B is well placed to see wide use in commercial and academic settings. Its 64K-token context window lets it work with large documents, which makes it particularly appealing for enterprise-level applications, where large volumes of data are the norm.

Mistral AI’s commitment to open-source technology aligns with a growing trend in the tech industry towards transparency and collaboration. By making Mixtral 8x22B freely available, Mistral AI accelerates innovation and democratizes access to cutting-edge technology.

Engage with Mixtral 8x22B

Prospective users and developers are encouraged to explore Mixtral 8x22B on La Plateforme, Mistral AI’s interactive platform. This platform provides direct access to the model, allowing users to experience its capabilities and fully integrate it into their projects.

As AI continues to shape numerous aspects of daily life and business, advancements such as the Mixtral 8x22B play a crucial role in ensuring that this technology evolves in a way that is accessible, efficient, and beneficial for all.

Source: Mistral
