Zyphra, an AI research company, has released Zamba-7B, a 7-billion-parameter foundation model designed to combine strong performance with high efficiency. The model stands out for its hybrid architecture, which could influence how compact AI models are developed and deployed.

The Essence of Zamba-7B

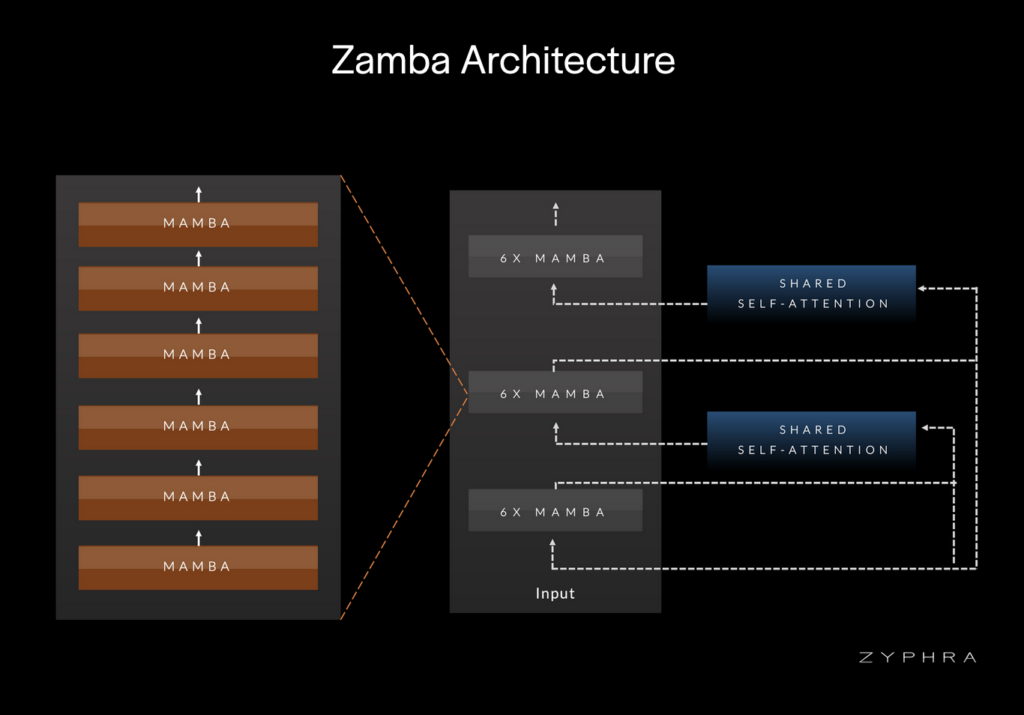

Zamba-7B is engineered to meet the growing demand for AI models that are both efficient and powerful. Its architecture is a hybrid: a backbone of Mamba (state-space model) blocks combined with a single global attention layer whose weights are shared and which is applied after every six Mamba blocks. The attention layer helps the model capture long-range dependencies in its input. Because it is invoked only periodically and reuses the same weights each time, it adds relatively little computational and parameter overhead, which keeps the model efficient and practical for a wide range of applications.
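To make the layer ordering concrete, here is a minimal Python sketch of the schedule described above. It is a hypothetical illustration, not Zyphra's code: the `Layer` class, the `build_schedule` function, and the total of 12 Mamba blocks are assumptions chosen for readability. What it shows is that every attention invocation reuses one parameter set, so the number of distinct weight sets grows only with the Mamba blocks.

```python
from dataclasses import dataclass

# Hypothetical sketch of a Zamba-style layer schedule: a stack of Mamba
# blocks with ONE attention block whose weights are shared and which is
# re-applied after every `attn_every` Mamba blocks. The block count and the
# exact insertion point are illustrative assumptions, not Zyphra's config.


@dataclass(frozen=True)
class Layer:
    kind: str       # "mamba" or "shared_attn"
    weight_id: str  # which parameter set this layer uses


def build_schedule(n_mamba: int, attn_every: int = 6) -> list[Layer]:
    """Return the forward-pass order of layers for the hybrid stack."""
    layers: list[Layer] = []
    for i in range(n_mamba):
        # Each Mamba block has its own, unshared parameters.
        layers.append(Layer("mamba", f"mamba_{i}"))
        # After every `attn_every` Mamba blocks, reuse the single shared
        # attention block (identical weight_id on every invocation).
        if (i + 1) % attn_every == 0:
            layers.append(Layer("shared_attn", "attn_shared"))
    return layers


if __name__ == "__main__":
    schedule = build_schedule(n_mamba=12)
    print([layer.kind for layer in schedule])
    # Distinct parameter sets: 12 Mamba blocks + 1 shared attention block.
    print(len({layer.weight_id for layer in schedule}))
```

With 12 Mamba blocks, the schedule contains two attention invocations but only 13 distinct parameter sets, which is the parameter saving that weight sharing provides.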

Breakthroughs in AI Performance and Efficiency

One of Zamba-7B’s most notable achievements is its training efficiency. A small team of seven researchers trained the model in 30 days on 128 H100 GPUs, using approximately 1 trillion tokens from open web datasets. This was followed by a second training phase on higher-quality data, intended to further improve the model’s overall performance.
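The training figures above translate into a rough compute budget. The short Python calculation below is a back-of-the-envelope sketch, assuming all 128 GPUs ran for the full 30 days; actual utilization and the split between the two training phases are not reported here, so the results are bounds rather than measurements.

```python
# Back-of-the-envelope compute budget from the figures quoted in the text.
# Assumption: all GPUs ran for the full 30 days (an upper bound on GPU-hours).
# Only the first-phase token count (~1 trillion) is given, so dividing it by
# the total GPU-hours gives a rough lower bound on throughput.

N_GPUS = 128
DAYS = 30
TOKENS_PHASE1 = 1e12  # "approximately 1 trillion tokens" (first phase only)

gpu_hours = N_GPUS * DAYS * 24
tokens_per_gpu_hour = TOKENS_PHASE1 / gpu_hours

print(f"GPU-hours (upper bound): {gpu_hours:,}")
print(f"Tokens per GPU-hour (rough lower bound): {tokens_per_gpu_hour:,.0f}")
```

This works out to at most about 92,160 GPU-hours, or on the order of 10 million first-phase tokens per GPU-hour at minimum.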

Zamba-7B competes effectively with established open models such as Mistral 7B and Gemma 7B, reaching similar performance levels despite being trained on far fewer tokens. This data efficiency reflects its design and positions it as a more sustainable alternative in AI development.

Community and Collaboration through Open-Source

Zyphra has taken a commendable step by releasing all training checkpoints of Zamba-7B under the Apache 2.0 license. This initiative aims to foster transparency and collaboration within the AI community. The model’s open-source nature ensures it is accessible for further research, adaptation, and innovation by developers, researchers, and companies.

Broader Implications and Future Prospects

The development of Zamba-7B indicates a shift towards creating AI models that are not only powerful but also resource-efficient and broadly accessible. This model is particularly well-suited for operation on consumer-grade hardware, which could democratize advanced AI capabilities.
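A quick way to see why a 7-billion-parameter model is within reach of consumer hardware is to estimate how much memory its weights occupy. The sketch below assumes exactly 7e9 parameters and counts weights only; the precision labels and the `weight_memory_gb` helper are illustrative, and real inference needs additional memory for activations, recurrent state, and caches.

```python
# Rough weights-only memory footprint of a 7-billion-parameter model at
# common numeric precisions. Ignores activations, the Mamba recurrent state,
# the attention cache, and framework overhead, so real usage is higher.
# The parameter count is rounded to 7e9 for illustration.

N_PARAMS = 7e9

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16/fp16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(n_params: float, bytes_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


for precision, nbytes in BYTES_PER_PARAM.items():
    print(f"{precision:>10}: {weight_memory_gb(N_PARAMS, nbytes):5.1f} GB")
```

At 16-bit precision the weights come to about 14 GB, which in principle fits on a single 24 GB consumer GPU; lower-precision formats shrink that further, provided the model and runtime support them.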

Zyphra plans to integrate Zamba-7B with Hugging Face and to release a detailed technical report to help the AI community get the most out of the model. This documentation is intended to make Zamba-7B a fully reproducible research artifact, which is crucial for ongoing scientific study and further improvement of AI technologies.

Engagement and Future Directions

As AI continues to evolve, models like Zamba-7B play an important role in pushing the boundaries of what is possible, making AI more accessible and efficient. Zyphra’s commitment to advancing the science of AI and its dedication to community collaboration are expected to inspire further innovations in the field.

With this new development, Zyphra contributes a tool of high scientific and practical value and reinforces the importance of sustainable, efficient, and accessible AI development for the future.

For more information about Zamba-7B and to access the resources, visit Zyphra’s official release notes and GitHub repository. This is an exciting time for AI enthusiasts and the industry as we step into a new era of technological empowerment with tools like Zamba-7B.

Source: Zyphra
