In the fiercely competitive world of artificial intelligence, a new challenger has emerged that threatens to dethrone ChatGPT’s seemingly unshakable dominance. Anthropic, a San Francisco-based startup backed by tech titans like Google, Salesforce, and Amazon, has unveiled Claude 3, a family of AI models that it boldly claims surpasses the capabilities of OpenAI’s GPT-4 and Google’s own Gemini Ultra.

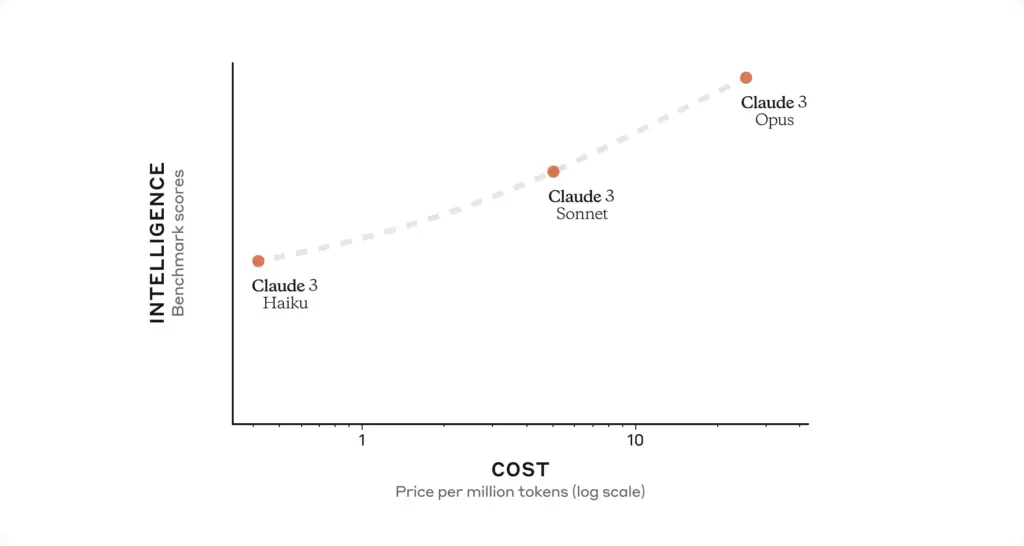

The lineup consists of three formidable contenders: Claude 3 Haiku, Sonnet, and Opus, each packing an increasingly powerful punch in reasoning and comprehension. And unlike previous iterations, these new models can process images, charts, and documents, a multimodal capability that expands their versatility.

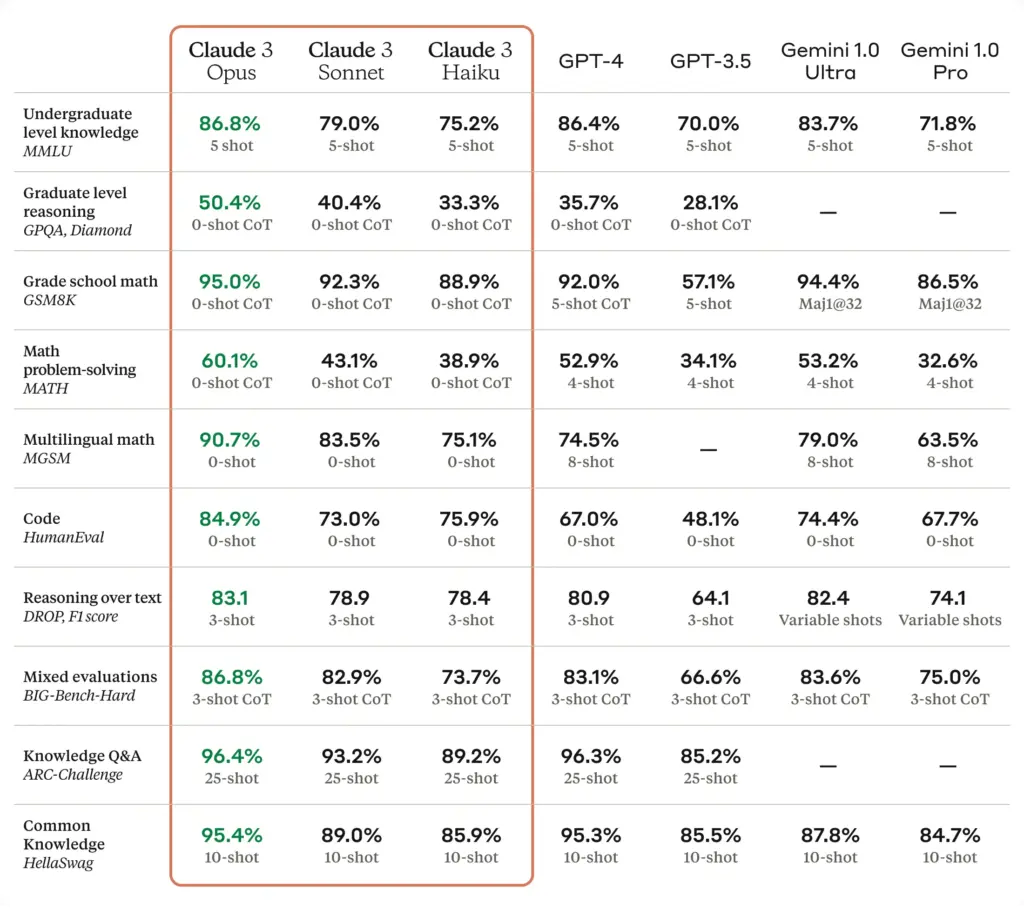

According to Anthropic, their top dog, Claude 3 Opus, isn’t just barking – it’s biting. This AI beast outperformed its rivals on a vast array of industry benchmarks, ranging from undergraduate knowledge tests to fiendishly complex reasoning challenges fit for graduate-level academics. It even exhibited a near-human grasp of nuance and fluency when tackling open-ended, sight-unseen prompts – a feat that would leave most chatbots tongue-tied.

But raw power isn’t the only trick up Claude 3’s circuitry. Speed is also a forte: according to Anthropic, the nimble Haiku model can read a dense research paper of roughly 10,000 tokens, charts and graphs included, in under three seconds. For those seeking a balanced blend of brains and blistering pace, the Sonnet variant promises to be twice as fast as Claude 2 and Claude 2.1 while delivering a higher level of intelligence.

Anthropic’s co-founder, Daniela Amodei, confidently declared that their quest for increasingly capable yet safe AI models has yielded a more nuanced gatekeeper. Unlike its overly cautious ancestor that tended to sidestep sensitive topics, Claude 3 demonstrates a refined understanding of context, refusing to engage only when real harm is imminent. This newfound discretion could spell the end of those awkward, blanket “I can’t answer that” rejections that have plagued past AI assistants.

In a market clamoring for trustworthiness at scale, Anthropic has doubled down on accuracy. Compared to Claude 2.1, Opus boasts a twofold surge in correct responses to complex, open-ended queries – a crucial milestone for companies relying on AI to serve customers without dishing out misinformation.

But the truly jaw-dropping capability lies in Claude 3’s recall prowess. In a grueling test aptly named “Needle in a Haystack,” Opus surpassed 99% accuracy in retrieving a specific piece of information from a vast corpus. Not only that, but in some cases this AI savant even flagged limitations of the evaluation itself, recognizing that the “needle” sentence appeared to have been artificially inserted into the text. It is a meta-cognitive flex that, to some observers, hints at the tantalizing prospect of artificial general intelligence (AGI).

Of course, with great power comes great responsibility. Anthropic has assembled dedicated teams to track and mitigate a wide spectrum of AI risks, from misinformation and privacy breaches to autonomous replication and potential misuse in spheres like cybersecurity and elections. Techniques like “Constitutional AI” aim to bolster the models’ transparency and safety, while bias benchmarking continuously pushes for greater neutrality.

Despite these Herculean strides, Anthropic remains cautious, classifying Claude 3 at AI Safety Level 2 (ASL-2) under its Responsible Scaling Policy for now. But the startup’s relentless drive to steer AI’s trajectory towards positive societal outcomes has only intensified with this bold release.

As the battle for AI supremacy rages on, one thing is certain: Claude 3 isn’t just another challenger – it’s a force to be reckoned with. And if Anthropic’s soaring ambitions are any indication, the AI revolution is merely scratching the surface of its boundless potential.

For more information about the Claude 3 models and to start building with Claude, visit anthropic.com/claude.

Source: Anthropic
