In a move aimed at reshaping enterprise AI, SambaNova has unveiled Samba-1, a model with more than 1 trillion parameters. Rather than a single monolithic network, Samba-1 combines more than 50 expert models into one system, and SambaNova positions it as a milestone that delivers performance comparable to much larger models.

Samba-1's defining feature is its architecture, which combines 54 expert models into a single framework. This lets it pair the broad capabilities of Large Language Models (LLMs) with the precision of smaller, specialized models across a wide range of tasks. Each request is routed to the expert best suited to it: if a user asks to convert text into SQL, Samba-1 sends the query to a text-to-SQL expert, and a follow-up request to translate that SQL into another format is handled by a dedicated text-to-code model. All of this happens within a single chat interface, so users never have to pick a model themselves.
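The routing described above can be sketched in a few lines of Python. This is a toy illustration, not SambaNova's implementation: the expert functions are hypothetical placeholders that just tag their input, and a simple keyword rule stands in for the learned router a real Composition of Experts system would use. The single `chat` method plays the role of the unified endpoint.

```python
from typing import Callable, Dict, List, Tuple

Expert = Callable[[str], str]


# Hypothetical placeholder experts; in a real system each would be a separate model.
def text_to_sql_expert(prompt: str) -> str:
    return f"[text-to-sql] {prompt}"


def text_to_code_expert(prompt: str) -> str:
    return f"[text-to-code] {prompt}"


def general_expert(prompt: str) -> str:
    return f"[general] {prompt}"


class CompositionOfExperts:
    """One entry point that dispatches each request to a specialised expert."""

    def __init__(self) -> None:
        self.experts: Dict[str, Expert] = {
            "sql": text_to_sql_expert,
            "code": text_to_code_expert,
            "general": general_expert,
        }
        # Conversation log of (expert name, prompt, reply), shared across experts.
        self.history: List[Tuple[str, str, str]] = []

    def route(self, prompt: str) -> str:
        # Toy keyword router (an assumption); a production router would be a model.
        text = prompt.lower()
        if any(word in text for word in ("python", "java", "code")):
            return "code"
        if "sql" in text:
            return "sql"
        return "general"

    def chat(self, prompt: str) -> str:
        # Pick an expert, call it, and record the exchange in the shared history.
        name = self.route(prompt)
        reply = self.experts[name](prompt)
        self.history.append((name, prompt, reply))
        return reply


if __name__ == "__main__":
    coe = CompositionOfExperts()
    print(coe.chat("Convert 'top 5 customers by revenue' into SQL"))
    print(coe.chat("Rewrite that SQL as Python code"))
```

The shared `history` list hints at how a unified chat interface can keep one conversation while switching experts. This toy does not pass that context to the experts, and the source does not describe how Samba-1 handles it.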

Key features of Samba-1 include:

- A single API endpoint that exposes many expert models, which SambaNova says lets Samba-1 match the performance of much larger multi-trillion-parameter models.

- Stronger data security: enterprises can train the model on confidential data inside their own infrastructure, reducing exposure to both external and internal data breaches.

- Up to a tenfold performance improvement, according to SambaNova, along with lower cost, less complexity, and a lighter management burden than is usual for running models of this scale.

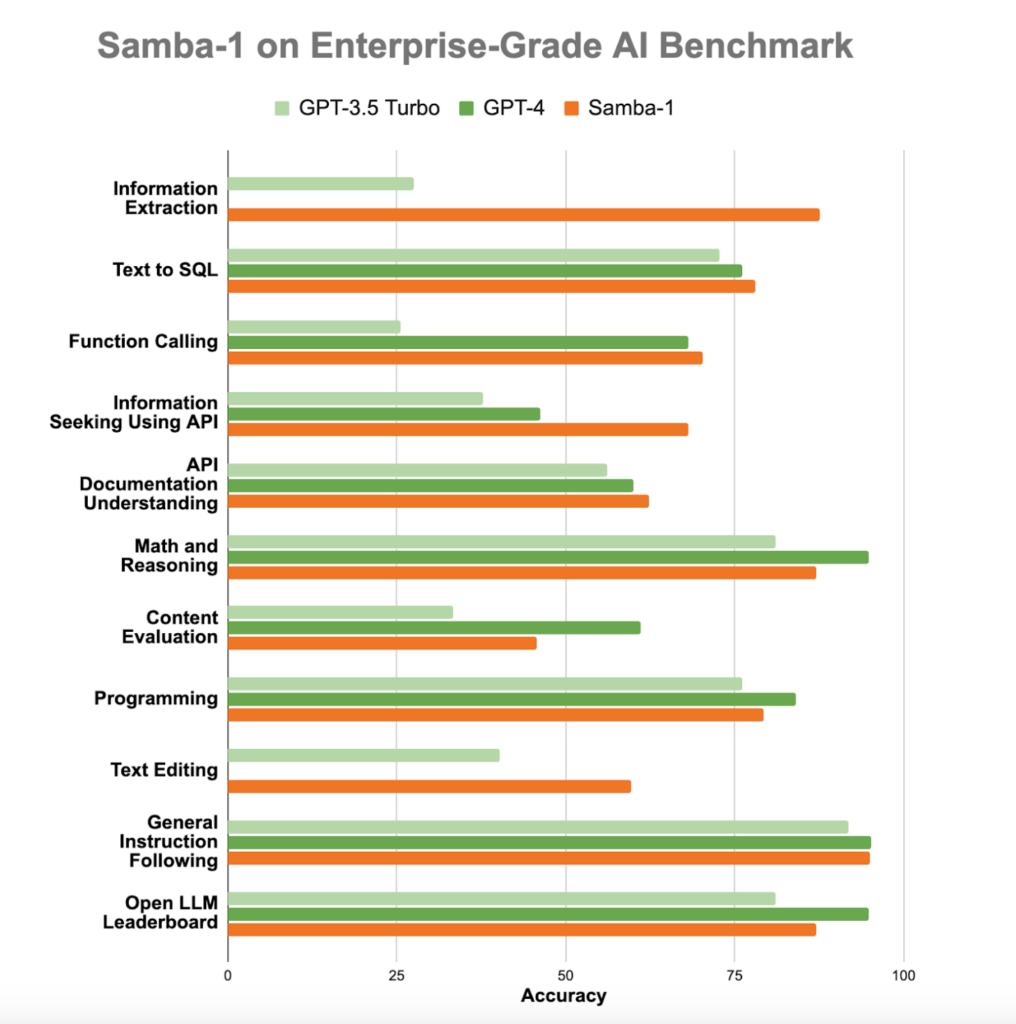

In SambaNova's benchmark tests, Samba-1 outperformed GPT-3.5 on all of the enterprise tasks evaluated and matched or exceeded GPT-4 on many of them, while requiring much less compute. SambaNova attributes this to its Composition of Experts approach, which brings together state-of-the-art (SOTA) expert models to improve both accuracy and functionality for enterprise applications.

Separately, Abacus AI has introduced DPO-Positive (DPOP), a new fine-tuning method for aligning LLMs with human preferences. It targets a weakness of standard Direct Preference Optimization (DPO): DPO can lower the likelihood the model assigns to the preferred response, as long as the rejected response's likelihood falls even further, which can hurt accuracy. DPOP adds a term to the loss that penalizes the model whenever it assigns the preferred response a lower probability than the reference model does, improving results across a variety of tasks.
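To make the DPOP idea concrete, here is a minimal Python sketch of the per-example loss as we understand it from Abacus AI's DPOP paper. Treat the exact form, and the default values for the temperature `beta` and the penalty weight `lam`, as illustrative assumptions rather than the authors' settings. Inputs are the summed log-probabilities of each full response under the policy being trained and under the frozen reference model.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpop_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,  # illustrative value, not taken from the paper
    lam: float = 5.0,   # illustrative penalty weight, not taken from the paper
) -> float:
    """DPOP loss for one preference pair, given summed log-probs of each response."""
    # Log-ratios of policy to reference for the preferred and rejected responses.
    chosen_logratio = policy_chosen - ref_chosen
    rejected_logratio = policy_rejected - ref_rejected
    # Positive only when the policy is LESS likely than the reference to produce
    # the preferred response; this is the term DPOP adds on top of DPO.
    penalty = max(0.0, ref_chosen - policy_chosen)
    logits = beta * (chosen_logratio - rejected_logratio - lam * penalty)
    return -log_sigmoid(logits)


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO is the special case lam = 0."""
    return dpop_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta, lam=0.0)


if __name__ == "__main__":
    # The preferred response became LESS likely (-8 -> -10), but the rejected one
    # dropped even more (-12 -> -20), so plain DPO still sees a healthy margin.
    args = dict(policy_chosen=-10.0, policy_rejected=-20.0, ref_chosen=-8.0, ref_rejected=-12.0)
    print(f"DPO : {dpo_loss(**args):.3f}")   # prints "DPO : 0.437"
    print(f"DPOP: {dpop_loss(**args):.3f}")  # prints "DPOP: 0.913"
```

With these made-up numbers, DPO's loss is about 0.437 while DPOP's is about 0.913, because DPOP registers that the preferred response lost likelihood. A real training loop would compute this over batches of tensors in a deep learning framework; plain floats keep the sketch dependency-free.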

DPOP's impact shows in the Smaug models, which were fine-tuned with it. Notably, Smaug-72B became the first open-source LLM to exceed an average score of 80% on the HuggingFace Open LLM Leaderboard. The Smaug models have outperformed other open models on that leaderboard and also performed strongly on several other benchmarks, suggesting that DPOP improves both accuracy and reliability.

Together, SambaNova's Samba-1 and Abacus AI's DPOP point to a shift in how AI systems are built and used. Combining specialized models with the broad capabilities of LLMs, and aligning those models more closely with human preferences, promises AI that is not only more capable but also easier to deploy securely and effectively across a wide range of applications.

Source: SambaNova
